Validate

What is Validate in SnapAttack?

For a walkthrough on how to launch an attack simulation and review the results, check out our tutorial below:

Validation is a process within the SnapAttack platform that can be used to measure the health of detection content and integrations. Detections can have logical or syntactical errors that prevent them from firing, or the telemetry data may not be collected or flowing properly all the way back to your SIEM. Knowing that content is validated means knowing that it works in real-world environments. In other words, a validated detection picks up on threat activity as intended, so an alert from it is a true positive when it fires.

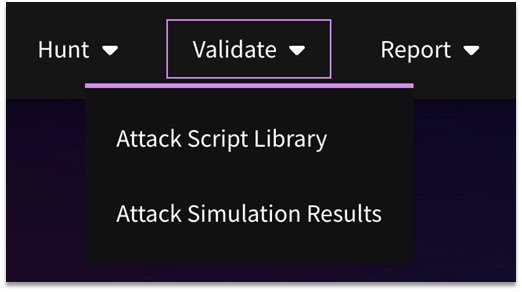

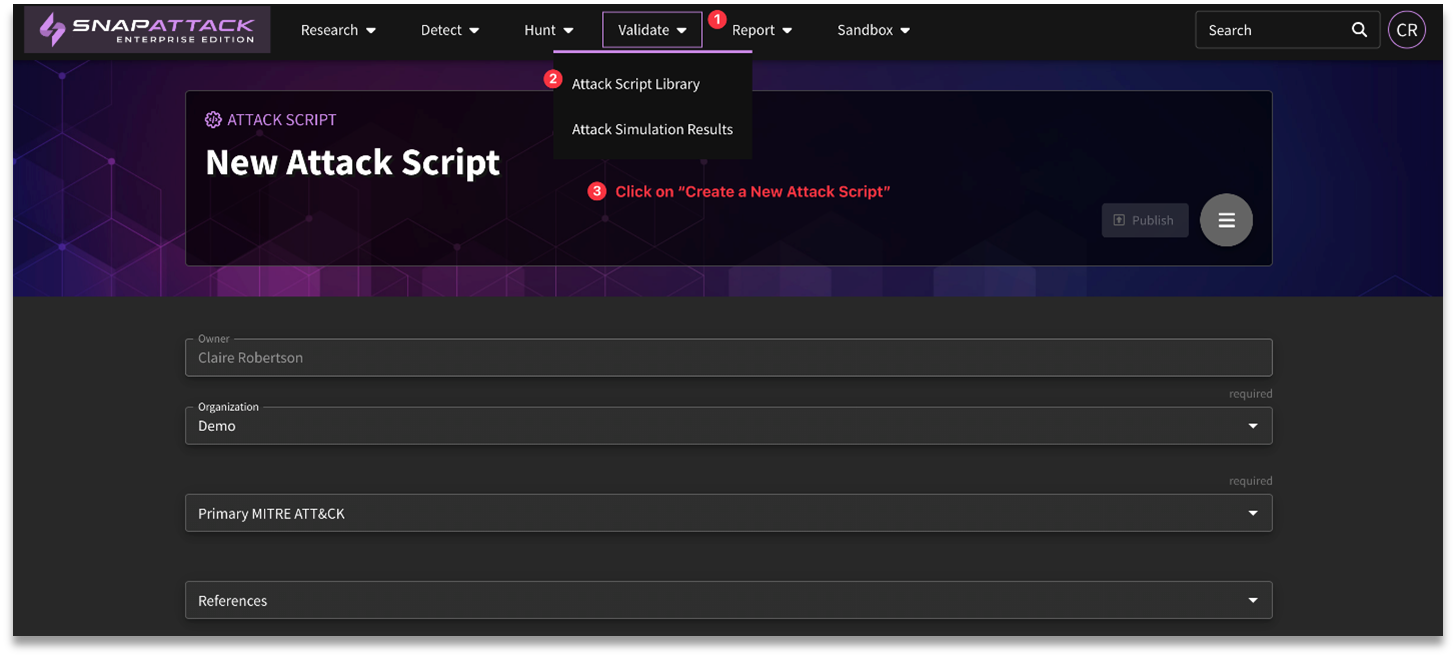

The Validate tab provides users with two navigational options: the Attack Script Library and the Attack Simulation Results repository.

- Attack Script Library: SnapAttack's attack script library provides ready-to-use attack scripts (formatted as attack-as-code) that can be exported and run within a user's environment, either as a diagnostic tool to measure detection coverage or as a validation module for end-to-end testing.

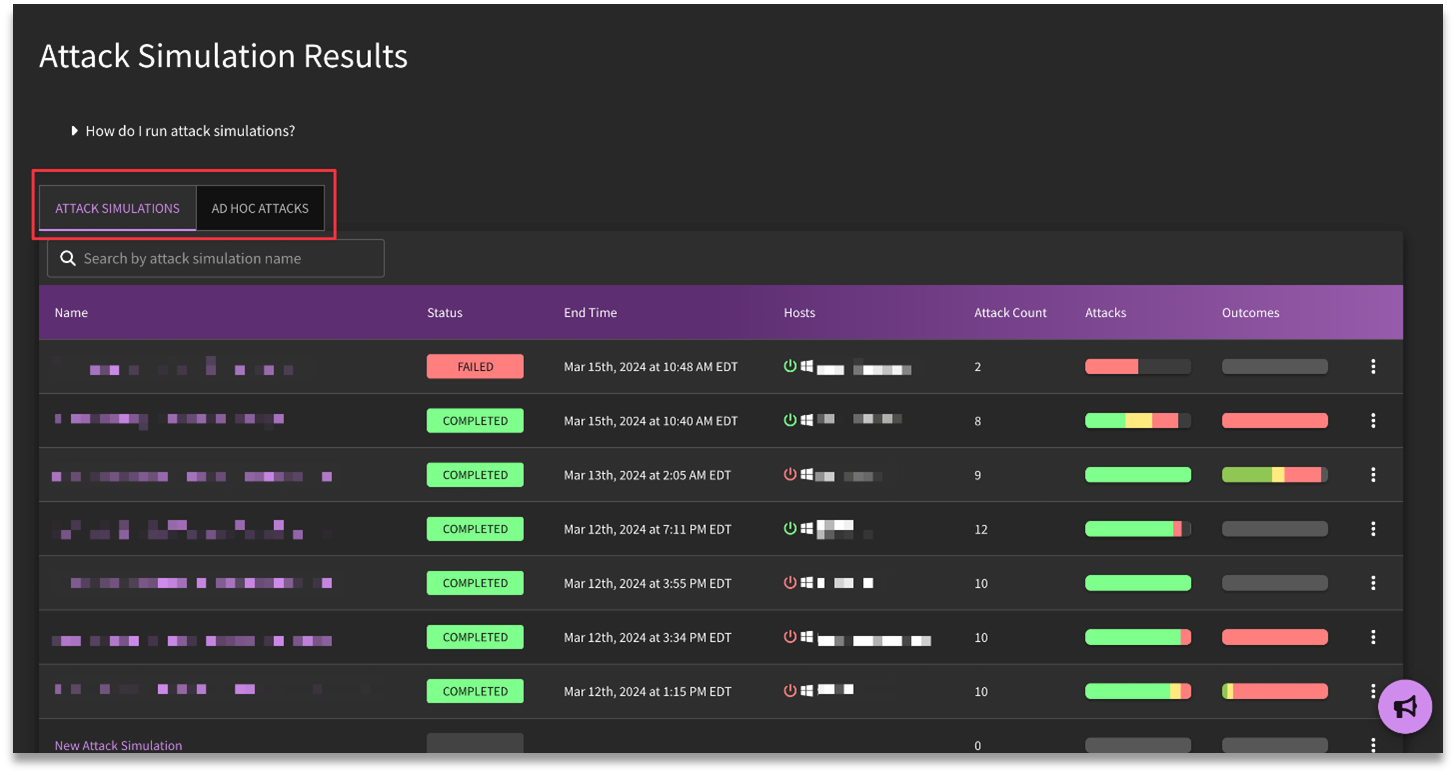

- Attack Simulation Results: The Simulation Results page provides users with access to the resulting metadata from either method of validation: Attack Simulations (Attack Plans) or Ad-Hoc Attacks.

  - Simulations: If a user runs attacks in bulk, the results are available on this tab.

  - Ad-Hoc Attacks: Conversely, results from single attacks are located under "Ad-Hoc Attacks".

What Validate Isn't

This can be confusing, but CapAttack and Validate are not the same. Both can be used to emulate adversarial activity. However, the captured threats from CapAttack are meant to document malicious activity and provide true positive examples in our threat library to help measure what detections are available, while Validation (Validate) is meant to be tailored to your environment and to test the viability and robustness of your detection content in production. In other words, CapAttack is "input", while Validate is "output".

Some potential use cases that would not be appropriate for Validate are the following:

- Automated penetration testing or red team simulations

- Automated utilization of different frameworks such as Cobalt Strike, Empire, and Metasploit

- Safely executing real malware/ransomware within a controlled environment

- Series of attack sequences, where the output of one test serves as the input for another

- Support for macOS or Cloud environments (as of the current date)

Common Use Cases of Validate

- Validate a native control or deployed SnapAttack detection through an attack test case

- Develop an attack test case targeting a novel threat

- Validate native controls or deployed detections pertaining to a particular subject through executing an attack plan

- Devise an attack plan focusing on a specific subject

- Implement automated outcome tracking through API integrations

- Assess outcomes from a designated test case or attack plan

- Analyze and contrast outcomes from a designated test case or attack plan across different time frames

- Establish recurring tests to ensure continuous validation and detect alterations in security outcomes

- Monitor and document changes in continuous validation over time

- Take necessary action to address any identified gaps (e.g., deploying detections, implementing mitigation measures, applying patches, etc.)
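Comparing outcomes across time frames, as mentioned in the use cases above, can be sketched in a few lines of Python. This is only an illustration: the outcome names come from this page, but the ranking from weakest to strongest, the dictionary layout, and the test case names are assumptions made for the example, not a SnapAttack export format.

```python
# Hypothetical ranking from weakest to strongest defensive outcome.
# The outcome names come from this page; the ordering is an assumption.
OUTCOME_RANK = {"No Action": 0, "Logged": 1, "Detected": 2, "Prevented": 3}


def compare_runs(earlier, later):
    """Return test cases whose outcome changed between two runs.

    Both arguments map a test case name to an outcome string.
    """
    changes = {}
    for test_case, old in earlier.items():
        new = later.get(test_case)
        if new is None or new == old:
            continue
        if old in OUTCOME_RANK and new in OUTCOME_RANK:
            trend = "improved" if OUTCOME_RANK[new] > OUTCOME_RANK[old] else "regressed"
        else:
            trend = "changed"  # e.g. Not Tested or Needs Review on either side
        changes[test_case] = (old, new, trend)
    return changes


# Made-up results from two runs of the same attack plan.
first_run = {"Shadow copy creation": "Detected", "Credential dump": "Logged", "Scheduled task": "No Action"}
second_run = {"Shadow copy creation": "Logged", "Credential dump": "Detected", "Scheduled task": "No Action"}

for name, (old, new, trend) in compare_runs(first_run, second_run).items():
    print(f"{name}: {old} -> {new} ({trend})")
```

A regression such as Detected to Logged is the kind of alteration that recurring tests are meant to surface.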

How to use Validation in SnapAttack

There are various layers to the Validation process:

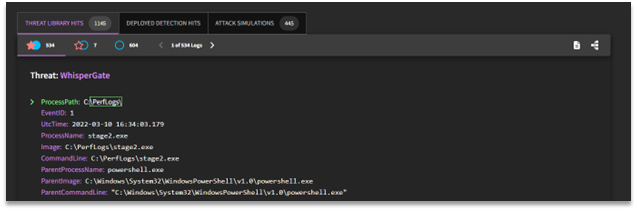

Threat Library Validation

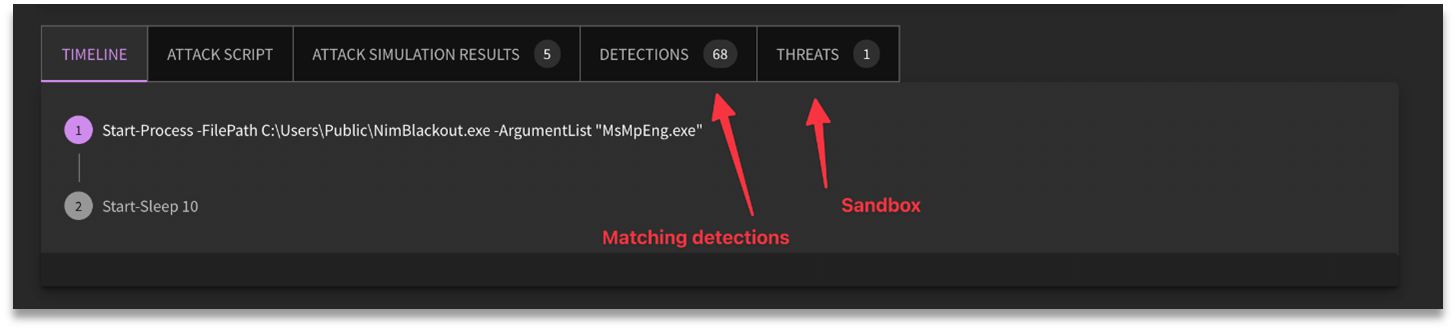

For customers who do not have integrations hooked up, this method is the suggested way to interact with validated content. All detections are tested against the SnapAttack Threat Library, and Threat Library Hits (formerly "Matching Logs") can serve as an easily implemented validation process. This is because Threat Library Hits bubble up true positive results that are tied to the behavioral detection.

However, this method does not offer the ability to validate native detections, or consider log source availability or the data engineering pipeline. A more robust plan of attack (no pun intended) is to utilize SnapAttack's Validate agent as illustrated below.

Validate Agent (Attack Simulations)

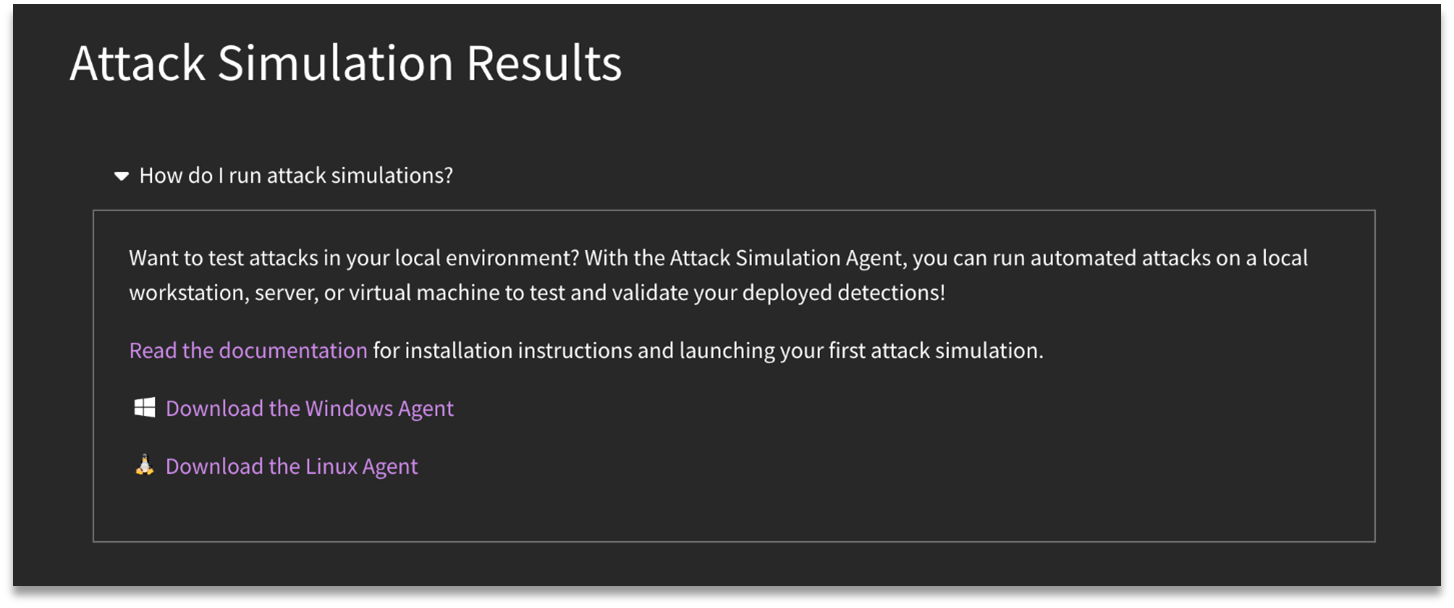

To set up the Validate agent, navigate to the Attack Simulation Results page to access the embedded installation documentation. Note that there are agents available for both Windows and Linux platforms.

Once the installation process is complete, the agent will reach out every twenty seconds (or so) to SnapAttack to grab any attacks that are meant to be run. These attacks, or attack scripts, are in the accompanying library.
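The check-in behavior described above follows a common polling pattern, which might look roughly like the Python sketch below. It is not the agent's actual code: `fetch_pending` and `execute` are hypothetical stand-ins for the platform request and the attack runner, and the fixed 20-second interval is taken from the approximate figure above.

```python
import time


def run_agent(fetch_pending, execute, interval_seconds=20, max_polls=None, sleep=time.sleep):
    """Poll for queued attacks and run each one.

    fetch_pending: callable returning a list of queued attacks (hypothetical).
    execute: callable that runs one attack (hypothetical).
    max_polls: stop after this many polls; None means run until interrupted.
    """
    polls = 0
    while max_polls is None or polls < max_polls:
        for attack in fetch_pending():
            execute(attack)
        polls += 1
        sleep(interval_seconds)


# Stand-ins for the platform queue and the executor, for demonstration only.
queue = [["attack-1", "attack-2"], [], ["attack-3"]]
executed = []
run_agent(
    fetch_pending=lambda: queue.pop(0) if queue else [],
    execute=executed.append,
    max_polls=3,
    sleep=lambda seconds: None,  # skip real waiting in the demo
)
print(executed)
```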

What are Attack Scripts?

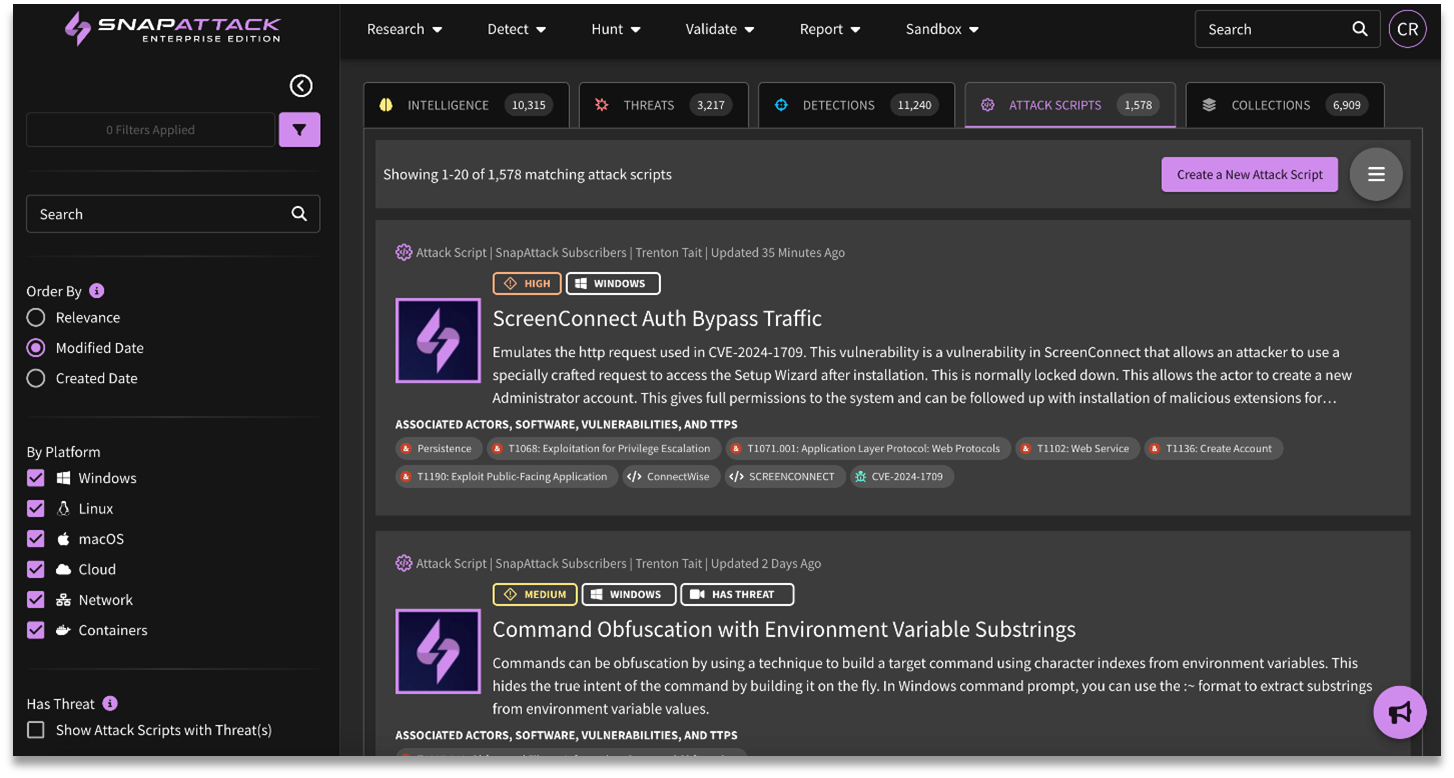

SnapAttack's attack script library contains more than 1,500 launchable attacks, originating from both the in-house research team and Red Canary's Atomic Red Team.

Attack scripts are linked to threats in SnapAttack's Threat Library. As such, they allow certain threats in the library to be "exported" from SnapAttack and run in a customer's environment. We also use this process to validate and recommend detections based on the Threat Library Hits.

There are four distinct pieces to an attack script, as illustrated below:

Dependencies: Prerequisites needed for the attack to be launched, such as:

- Elevated privileges (Administrator, SYSTEM, or root level access)

- A domain-joined machine, or specific domain configurations

- User account credentials

- Specific operating system or application versions

- Specific software or dependencies installed

- Specific system configurations, or misconfigurations if exploiting weakness or vulnerabilities

Failure of a dependency or prerequisite may not be a bad thing. Oftentimes, it means that the test case is not applicable to your environment.

Attack Commands: The step-by-step commands to reproduce the attack, such as vssadmin.exe create shadow /for=#{drive_letter}

Validation: A test of whether an attack script completed successfully. It relies on success criteria, which can look at the exit code of the process, check standard output or standard error for specific strings, or perform more complicated tests such as checking file or registry contents.

Success Criteria

Success criteria are an optional feature provided by SnapAttack. They allow you to write checks in the attack script to verify whether part or all of the code ran as intended. See More on Success Criteria for examples. Attack scripts from the Atomic Red Team project have no built-in knowledge of success, so SnapAttack supplies success criteria for those scripts.

Cleanup: Commands used to modify/remove the resulting attack script data post-validation in an attempt to restore the system to a stable state. This may not work in all cases, and attack scripts should not be run on business critical machines in production.
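The vssadmin.exe command shown under Attack Commands contains a `#{drive_letter}` placeholder, the Atomic Red Team style for an input argument. The Python sketch below shows one way such a placeholder could be filled in before the command runs. The `render_command` helper and the value `C:` are illustrative assumptions, not part of SnapAttack.

```python
import re

# Matches placeholders of the form #{argument_name}.
PLACEHOLDER = re.compile(r"#\{(\w+)\}")


def render_command(template, arguments):
    """Replace each #{name} placeholder with its value from arguments."""
    def substitute(match):
        name = match.group(1)
        if name not in arguments:
            raise KeyError(f"no value supplied for #{{{name}}}")
        return str(arguments[name])

    return PLACEHOLDER.sub(substitute, template)


command = render_command(
    "vssadmin.exe create shadow /for=#{drive_letter}",
    {"drive_letter": "C:"},  # example value chosen for illustration
)
print(command)  # vssadmin.exe create shadow /for=C:
```

Raising an error for a missing argument, rather than leaving the placeholder in place, keeps a half-rendered command from being executed.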

Creating an Attack Script

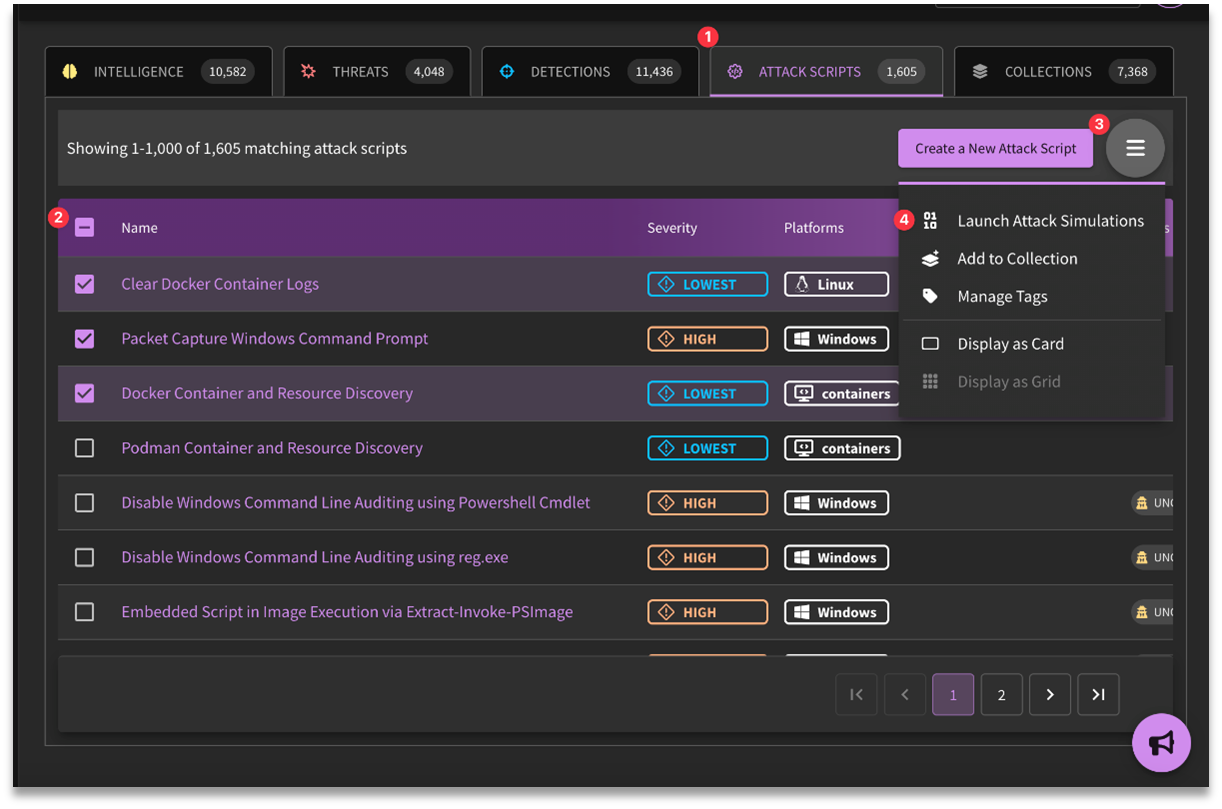

One of the features of SnapAttack's Validate module is that it allows users to create their own attack scripts. To create a new script, click on the "Create a New Attack Script" button from the Attack Script Library page.

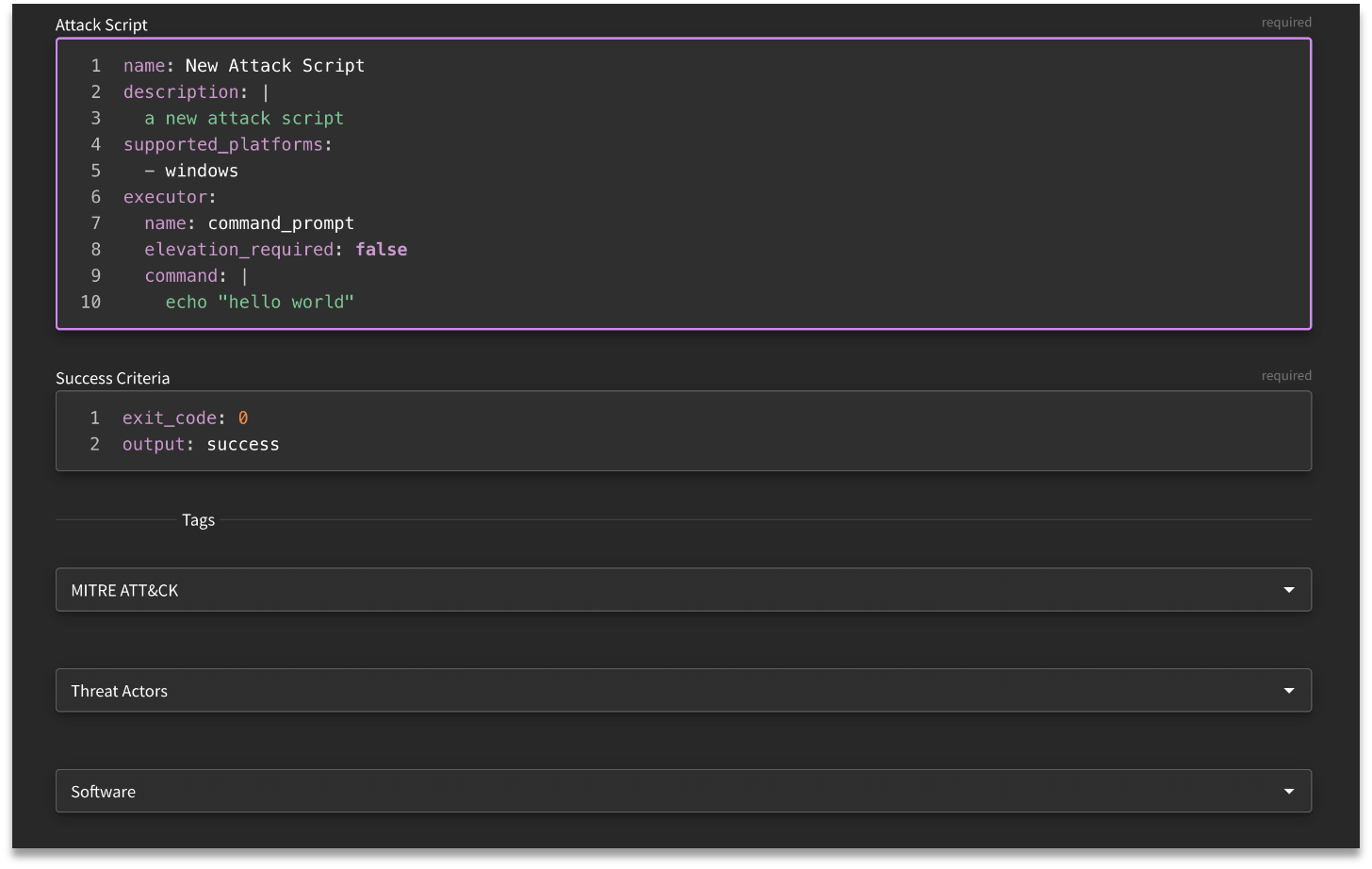

Because SnapAttack builds on the Atomic Red Team convention, you must provide a primary MITRE ATT&CK tag for the attack script, though other tags can also be specified. Optional fields let you add reference materials that give more context on the threat, as well as additional tags for ATT&CK, Threat Actors, Malware, and Vulnerabilities.

The required fields are templated within the text editor:

- name: Title of the Attack Script (e.g., Windows Streaming Service Privilege Escalation)

- description: High level context of the threat

- supported_platforms: Prerequisite system requirements

- executor: Step-by-step commands to execute the attack

  - name: The shell which will be used to execute the command (e.g., command_prompt, powershell, etc.)

  - command: The command(s) to be run for each step of the attack.

NOTE: If a command has a lengthy run time, the platform may time out. In the event of a timeout, the command may need to be adjusted.
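As a rough illustration of the required fields, the Python sketch below checks a script, represented as a dictionary, for missing values. The nesting of name and command under executor follows the Atomic Red Team convention and is an assumption here, as are the `missing_fields` helper and the sample values. The platform's own editor performs its own validation.

```python
REQUIRED_TOP_LEVEL = ("name", "description", "supported_platforms", "executor")
REQUIRED_EXECUTOR = ("name", "command")


def missing_fields(script):
    """Return the dotted names of required fields that are absent or empty."""
    missing = [field for field in REQUIRED_TOP_LEVEL if not script.get(field)]
    executor = script.get("executor")
    if isinstance(executor, dict):
        missing += [f"executor.{field}" for field in REQUIRED_EXECUTOR if not executor.get(field)]
    return missing


# Sample script with an empty command, for demonstration only.
script = {
    "name": "Windows Streaming Service Privilege Escalation",
    "description": "High-level context of the threat",
    "supported_platforms": ["windows"],
    "executor": {"name": "powershell", "command": ""},
}
print(missing_fields(script))  # ['executor.command']
```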

More on Success Criteria

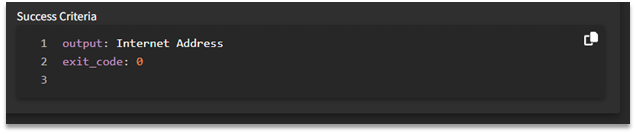

SnapAttack provides a flexible mechanism to determine if attack scripts completed successfully or not. But what is involved with success criteria creation?

- Success criteria are an optional set of conditions to confirm an attack script ran successfully.

- All conditions MUST be met to return a successful status, so the more conditions, the more confirmation you will have that the script ran as intended.

- Success criteria must have at least one exit_code or output condition.

  - exit_code is satisfied if it exactly matches the exit code returned by the attack script's regular command.

  - output is satisfied if the given string is anywhere in the output of the attack script's regular command (standard output and standard error are combined).

- There are also follow_up conditions. These run a command in between an attack script's regular command and its cleanup command. They require a command, which uses the same executor and elevation settings as the attack script's regular command. They also require at least one exit_code or output condition, which work the same as the conditions described above but are applied to the follow-up command. These can be used to check the contents of files, registry settings, etc.

Example:

    exit_code: 0
    output: The command completed successfully
    follow_up:
      command: type.exe %TEMP%\output.ext
      output: password
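The rules above can be sketched in Python as follows. This is a minimal illustration and not SnapAttack's implementation: the function names and the `run_follow_up` callable are hypothetical, and a single follow_up mapping is assumed, as in the example.

```python
def condition_met(criteria, exit_code, output):
    """Check whichever of the exit_code and output conditions are present."""
    if "exit_code" in criteria and criteria["exit_code"] != exit_code:
        return False  # exit codes must match exactly
    if "output" in criteria and criteria["output"] not in output:
        return False  # the string may appear anywhere in the output
    return True


def script_succeeded(criteria, result, run_follow_up):
    """Return True only if every condition is met.

    result: (exit_code, combined stdout and stderr) of the regular command.
    run_follow_up: callable that runs a follow-up command and returns the
        same kind of tuple (hypothetical stand-in for the agent).
    """
    exit_code, output = result
    if not condition_met(criteria, exit_code, output):
        return False
    follow_up = criteria.get("follow_up")
    if follow_up:
        follow_exit, follow_output = run_follow_up(follow_up["command"])
        return condition_met(follow_up, follow_exit, follow_output)
    return True


criteria = {
    "exit_code": 0,
    "output": "The command completed successfully",
    "follow_up": {"command": r"type.exe %TEMP%\output.ext", "output": "password"},
}


def fake_follow_up(command):
    # Pretend the follow-up command printed a file that contains the string.
    return 0, "username=admin password=example"


print(script_succeeded(criteria, (0, "The command completed successfully."), fake_follow_up))  # True
print(script_succeeded(criteria, (1, "Access is denied."), fake_follow_up))  # False
```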

Recommended Workflows

General Workflow

Install Validate Agent → Attack Script Library → Filter → Grid View/Single Attack → Launch Attack Simulation → Save → Review results under Attack Simulation Results → Determine Outcomes → Deploy Recommended Detections/Export Report

These collections can be helpful when getting started.

- Getting Started with SnapAttack Validate Attack Simulation: This collection contains Attack Scripts and recommended detections to help you get started validating your detections end-to-end. The threats in the collection are hand-picked to represent a variety of ATT&CK techniques and come with detections that SnapAttack has validated against them.

- EDR Field Mapping Validation Attack Simulation: This collection contains validations and detections intended to insert and search for values mapped from the top fields across all EDR SnapAttack detections.

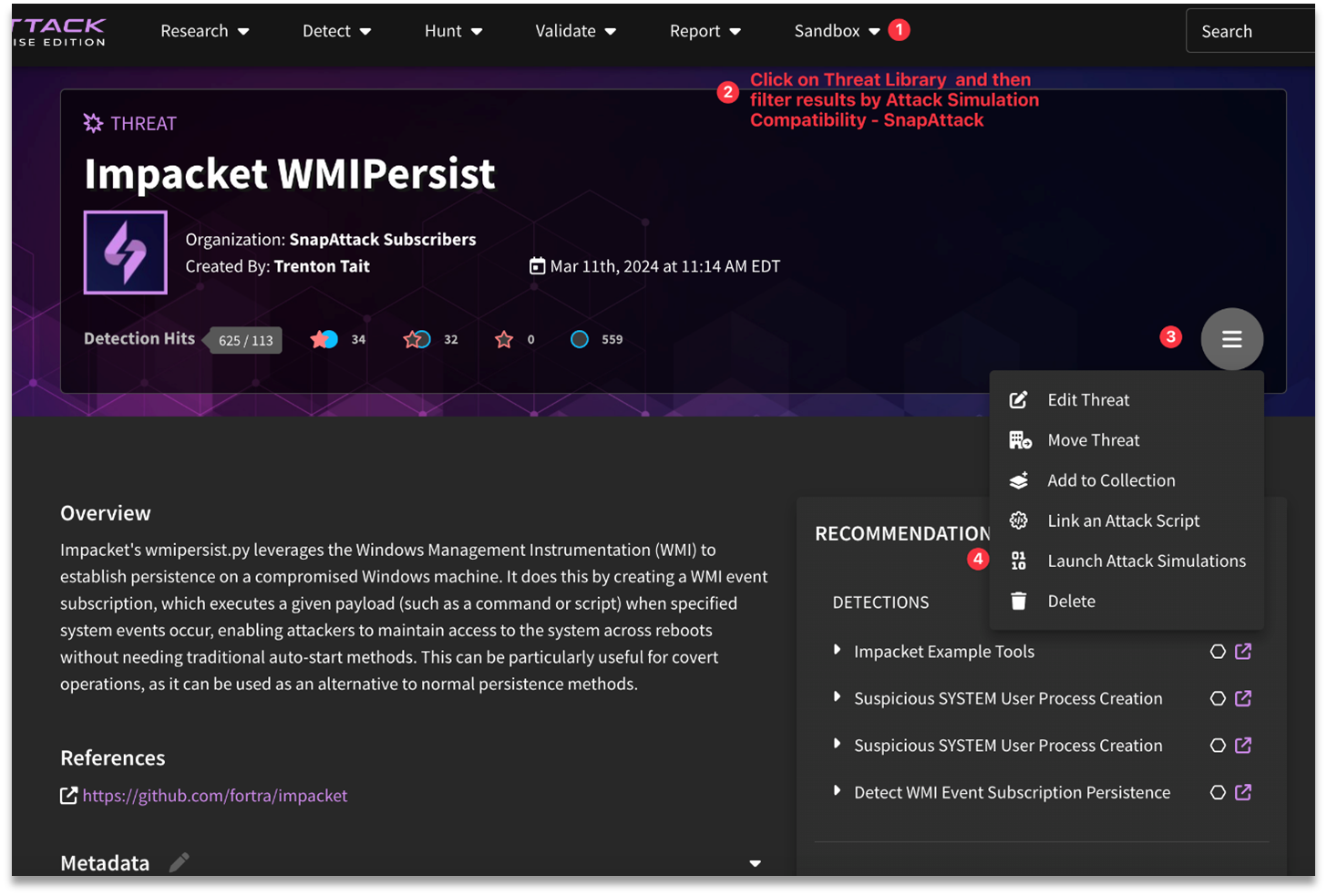

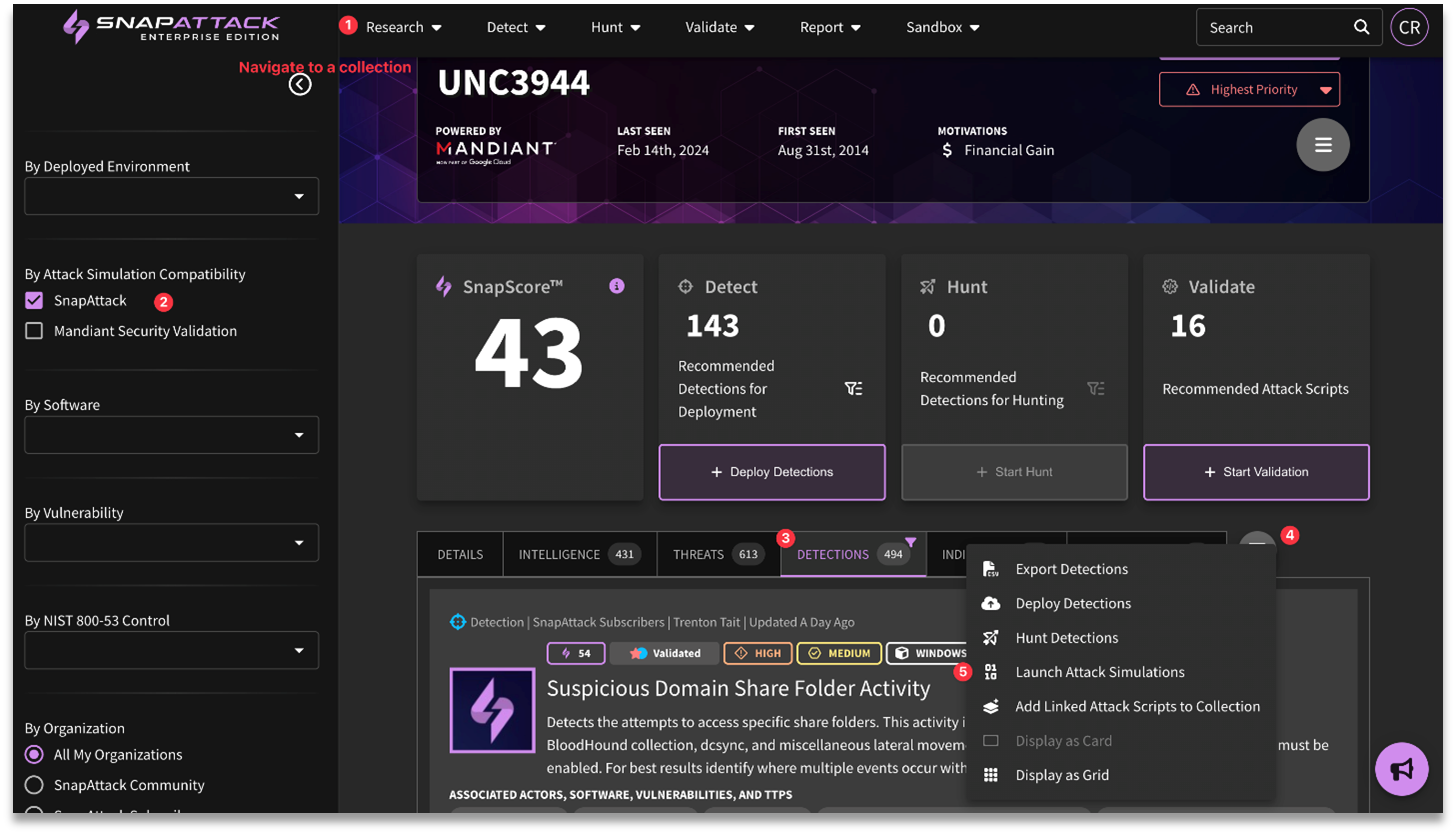

Launching Attack Simulations / Ad-Hoc Attacks

Attack simulations can be launched from:

- Threat, Detection, or Validation Feed → filter/select items → hamburger menu → Launch Attack Simulation - This will create an Attack Simulation if multiple options are selected.

- Threat, Detection, or Validation page → hamburger menu → Launch Attack Simulation - This will create an Ad-hoc Attack

- Inside a Collection's Threats, Detections, or Attack Scripts tabs → filter/select items → hamburger menu → Launch Attack Simulation - This will create an Attack Simulation if multiple options are selected

Post-Validation Processes

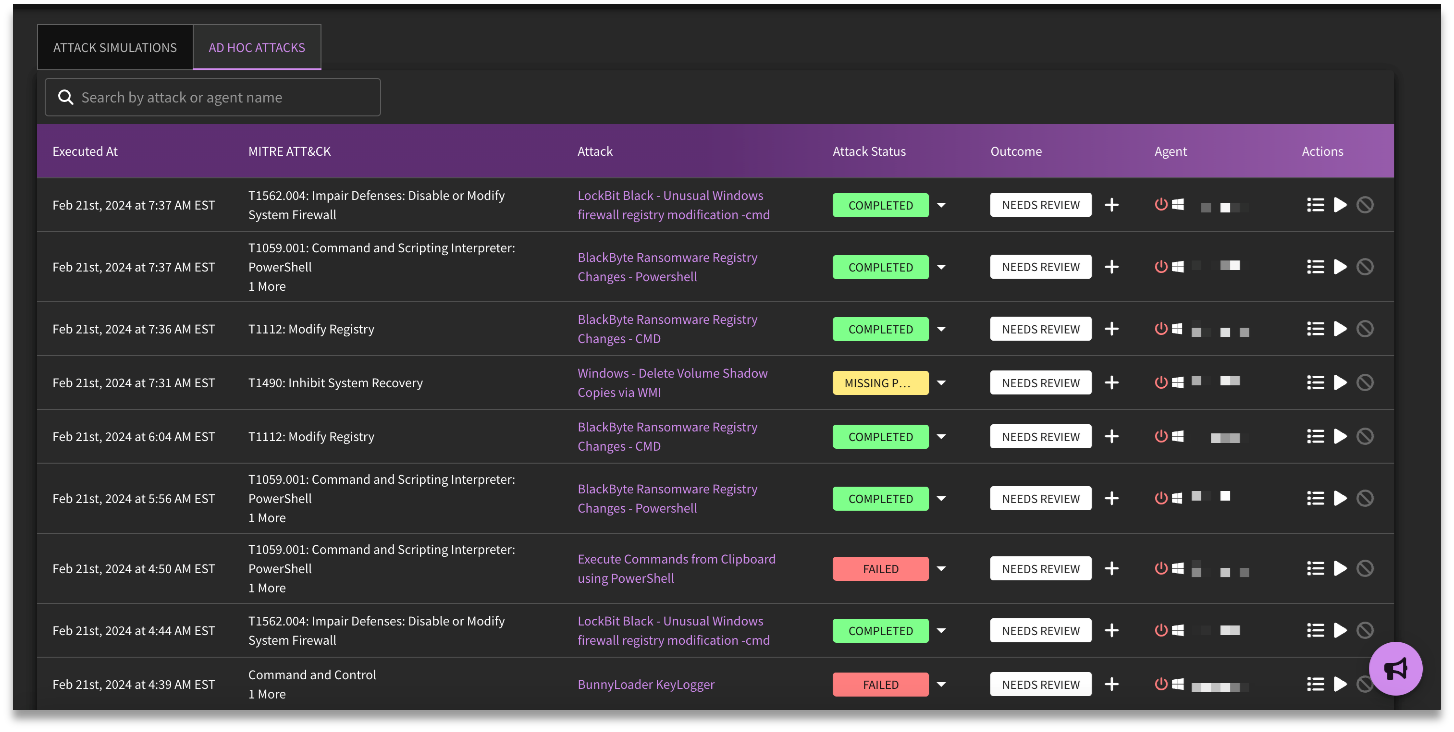

Users who have completed an attack simulation can review the results under Attack Simulation Results. Note that jobs are separated by "Attack Simulations" and "Ad-hoc attacks".

Top Level Actions

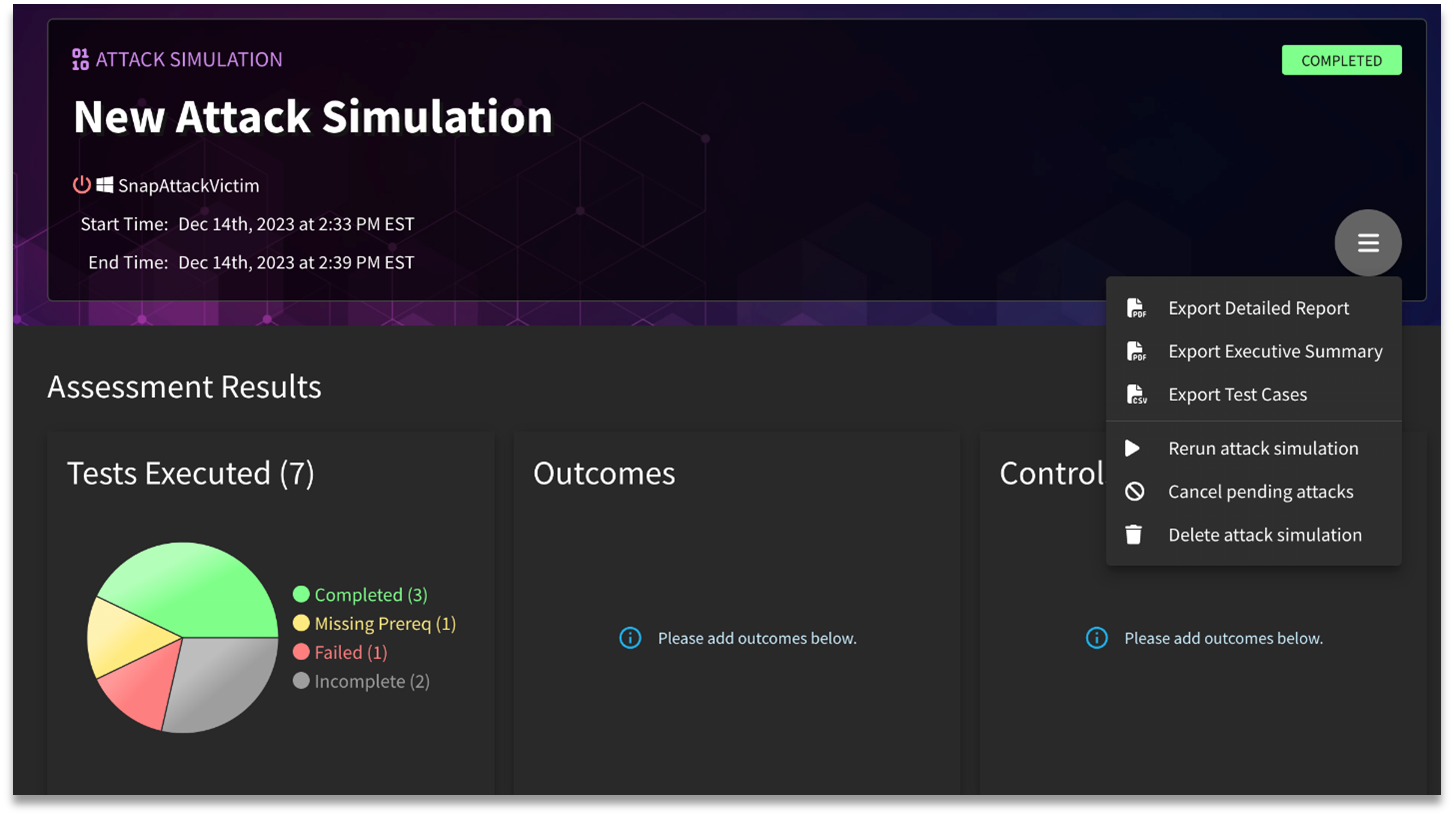

Attack simulation results are meant to be shared/utilized, so there are various options available to users:

- Export Detailed Report: The final deliverable containing all findings from the attack simulation, including the attack logs from Detailed Results.

- Export Executive Summary: The final deliverable containing only the Assessment Results, Deployed Detections, MITRE ATT&CK Coverage, Recommended Detections, and Test Summary.

- Export Test Cases: The test cases from Detailed Results available as JSON object or CSV file.

- Rerun attack simulations: One-click option to rerun the attack simulation

- Cancel pending attacks: Any attacks that are still pending can be canceled.

- Delete attack simulations: Delete the attack simulation altogether.

Assessment Results

Once an attack simulation has been completed, users can review the resulting stats (illustrated above). Under Tests Executed, users can see how many scripts were run, along with the final status of those tests (Completed, Missing Prereq, Failed, and Incomplete). Outcomes provides a visualization for the tracked detection outcomes for the attack simulation in question (Prevented, Detected, Logged, No Action, and Not Tested). Finally, Controls refers to the identified security controls (aka detections) and tooling used to respond to the simulation results.
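The Tests Executed and Outcomes figures are tallies over the individual test cases. A small Python sketch of that kind of summary is shown below. The record layout with `status` and `outcome` keys is an assumption made for the example and is not the format of the exported test cases.

```python
from collections import Counter


def summarize(results):
    """Count test cases per final status and per outcome."""
    statuses = Counter(record["status"] for record in results)
    outcomes = Counter(record["outcome"] for record in results)
    return statuses, outcomes


# Made-up test case records, for demonstration only.
results = [
    {"status": "Completed", "outcome": "Detected"},
    {"status": "Completed", "outcome": "Logged"},
    {"status": "Completed", "outcome": "Prevented"},
    {"status": "Completed", "outcome": "No Action"},
    {"status": "Missing Prereq", "outcome": "Not Tested"},
]

statuses, outcomes = summarize(results)
for label, count in statuses.items():
    print(f"Tests Executed / {label}: {count}")
for label, count in outcomes.items():
    print(f"Outcomes / {label}: {count}")
```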

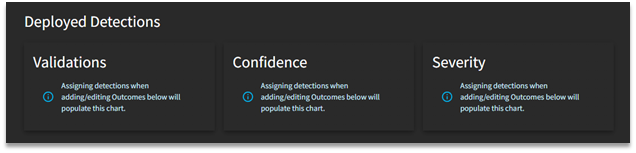

Deployed Detections

This section will be filled out once detections are assigned to attacks. This will provide more context into validation status, confidence levels, and severity designations.

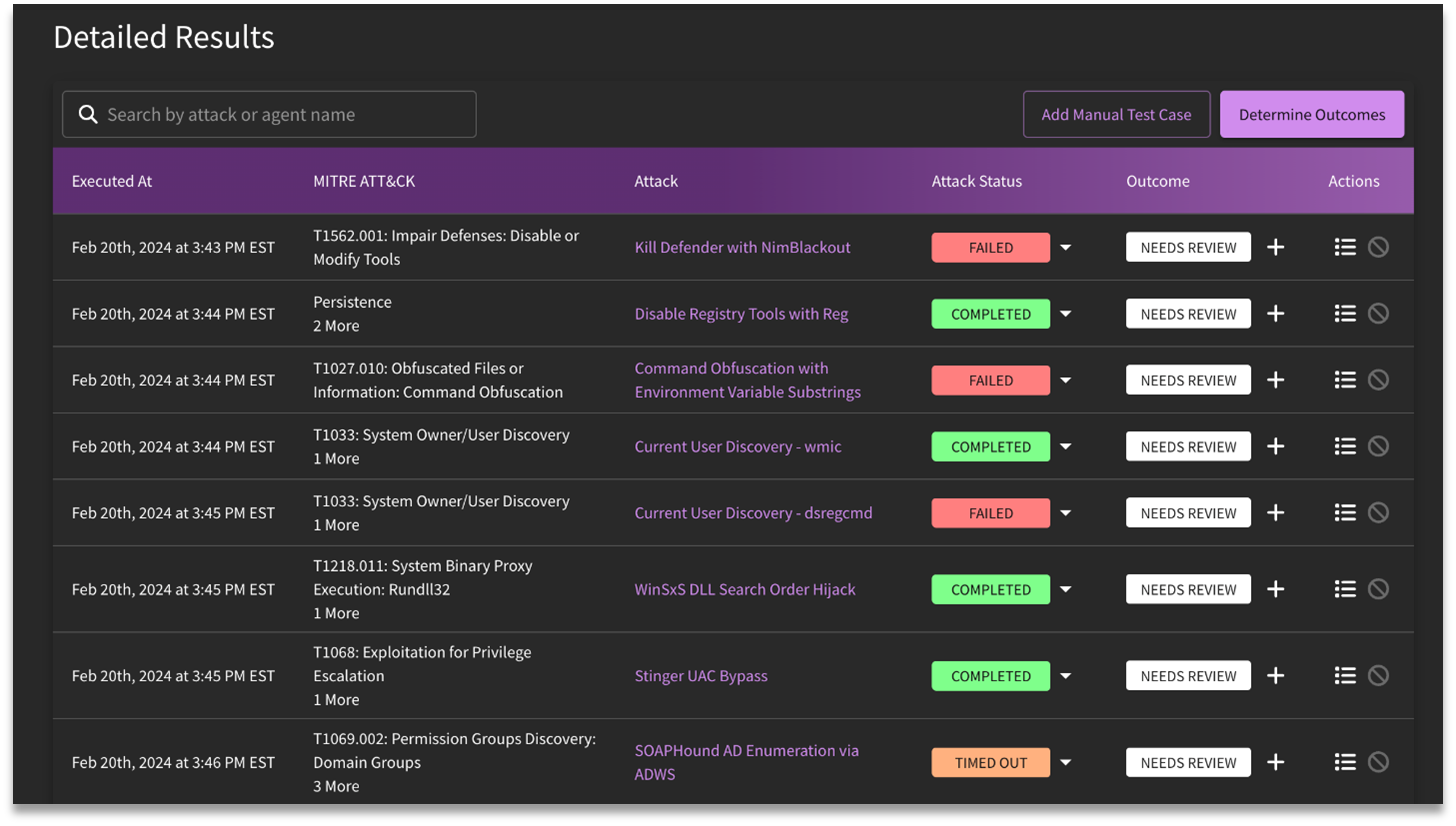

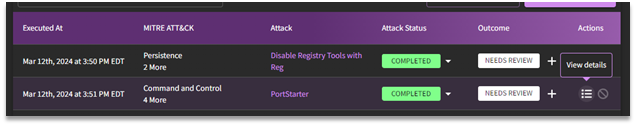

Reviewing Detailed Results

The next step of the validation process is to review the results post-attack simulation. Select the desired attack simulation job to access the resulting report.

Detailed results for attack plans (listed under Attack Simulations) will provide a breakdown of each attack that was launched, along with the target MITRE ATT&CK technique, and various selections that require human intervention (Attack Status, Outcome, Actions, Determine Outcomes, and Add Manual Test Case).

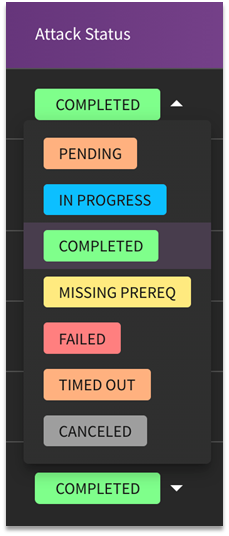

Attack Status

The selections available for Attack Status are as follows:

- Pending: An attack has been queued.

- In Progress: The attack simulation is currently in progress.

- Completed: The simulation has been completed.

- Missing Prereq: The agent determined that one or more of the attack's prerequisites were missing, so the attack did not execute.

- Failed: The simulation failed.

- Timed Out: Due to outside factors, the agent timed out before it could complete the simulation.

- Canceled: The simulation was canceled before it was completed.

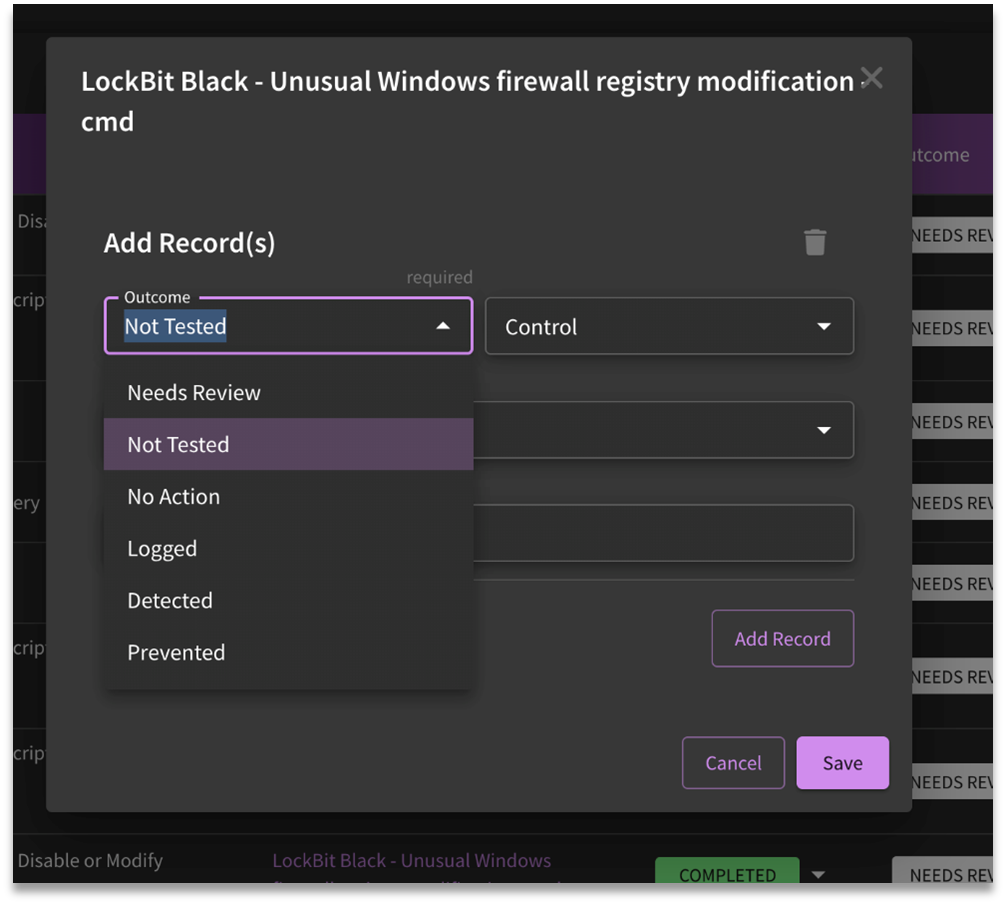

Outcomes

The available selections for Outcome are as follows:

- Needs Review: The default state. The test case was run, and someone needs to look at the outcome.

- Not Tested: Some test cases have prerequisites that must be met (an application must be installed, a computer must be joined to a domain, a specific OS version needs to exist). If a prerequisite is not met, the test is skipped.

- No Action: SnapAttack did not see any evidence of the attack from connected integrations (no logs or telemetry data related to the attack).

- Logged: SnapAttack saw event logs in your configured integration (SIEM/EDR) that could be used for threat hunting/alerting. No alert was generated.

- Detected: A SnapAttack-deployed detection would have triggered an alert in the integration.

- Prevented: An active defense prevented the attack from completing successfully (e.g., EDR or anti-virus action).
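The outcome definitions above can be read as a decision order, sketched here in Python. The function and its boolean inputs are hypothetical, and the precedence (Prevented over Detected over Logged) is an assumption drawn from the definitions rather than a documented rule. Needs Review is omitted because it is the default state before anyone has looked at the result.

```python
def classify_outcome(prereqs_met, attack_blocked, alert_fired, logs_seen):
    """Map observations about one test case to an outcome label."""
    if not prereqs_met:
        return "Not Tested"  # the test was skipped
    if attack_blocked:
        return "Prevented"  # an active defense stopped the attack
    if alert_fired:
        return "Detected"  # a deployed detection would have alerted
    if logs_seen:
        return "Logged"  # telemetry exists, but no alert
    return "No Action"  # no evidence in connected integrations


print(classify_outcome(True, False, False, True))  # Logged
print(classify_outcome(False, False, False, False))  # Not Tested
```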

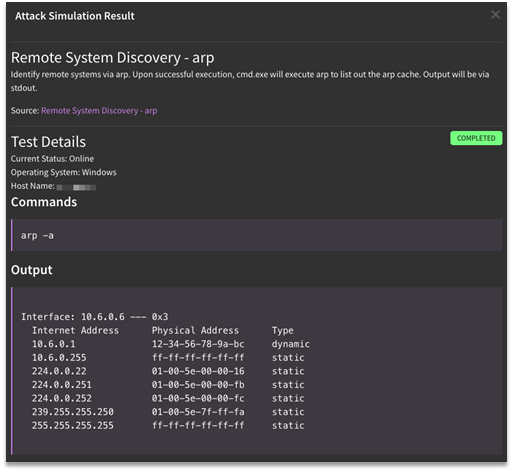

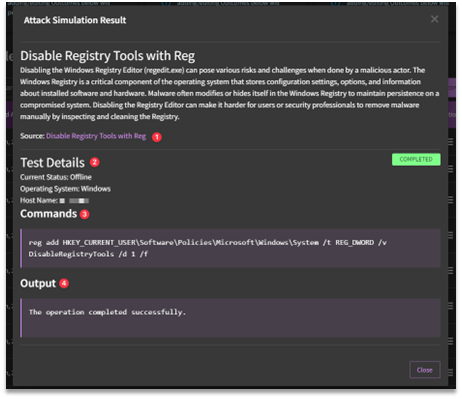

View Details

Users can access the underlying context of the attack used in the simulation under View Details.

- Source: The source of the attack is hyperlinked in the detailed output.

- Test Details: Includes the current status of the virtual machine, what type of OS it is using, and the host name.

- Commands: Details on the command executed during the attack are listed under Commands.

- Output: Information listed under Output is in relation to the standard output of the attack.

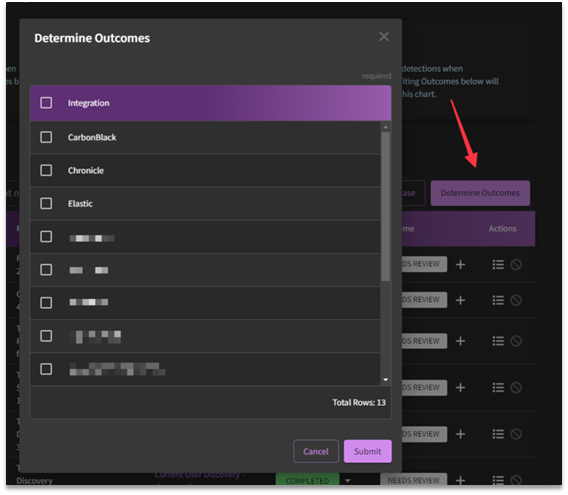

What is Determine Outcomes?

In the past, attack simulations only provided reports on whether the attack succeeded or failed. While this information is crucial, the primary objective is to ascertain the defensive outcome: whether the attack was thwarted or detected. Are our logs visible and covering these outcomes? Now, with the click of a button labeled "Determine Outcomes" and the selection of your integrations, you can automatically assess detection outcomes for a configured integration.

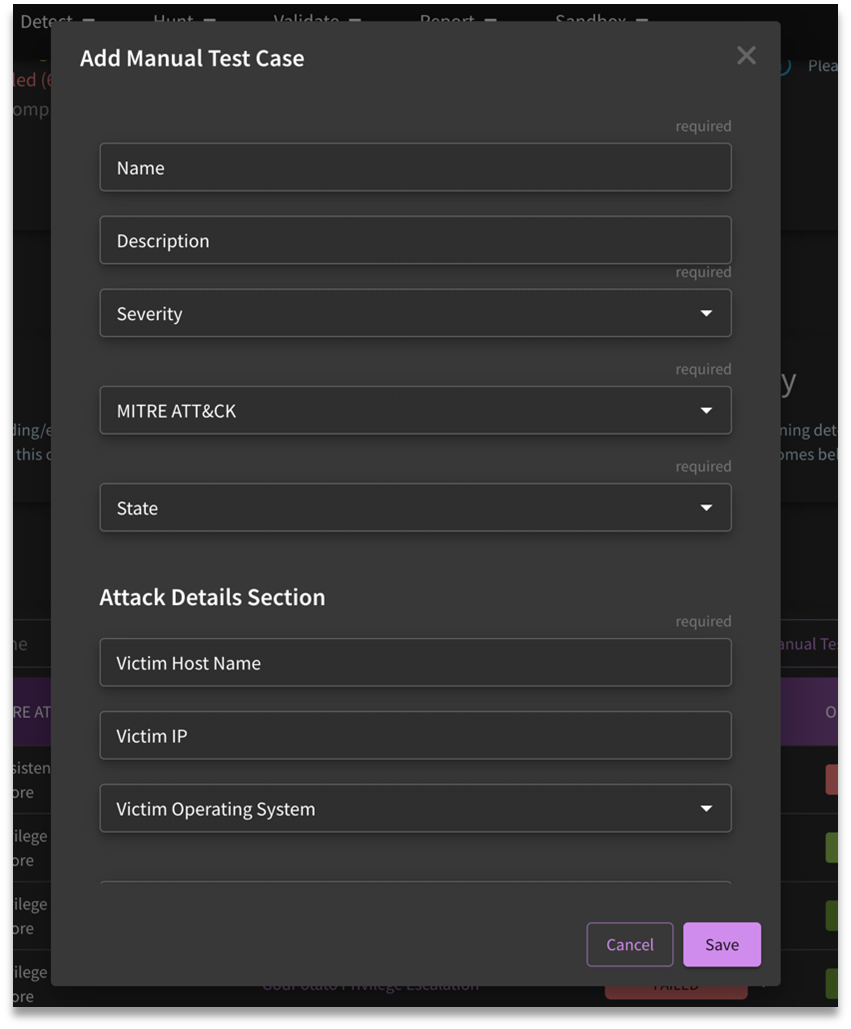

What Does Add Manual Test Case Do?

Manual Test Cases let customers document red team activity or tests that are difficult to automate.

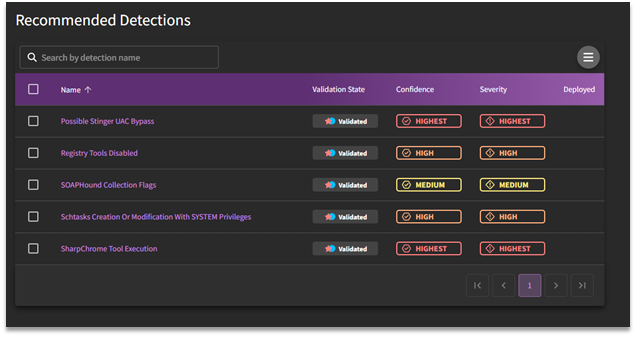

Recommended Detections

Recommended after-actions are presented under Recommended Detections so that teams can begin building a defense against potential exploitation of the threat in question. These recommendations come from SnapAttack's recommender engine, which matches criteria such as MITRE ATT&CK tag, confidence and severity level, and validation status.